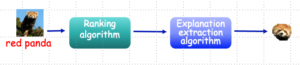

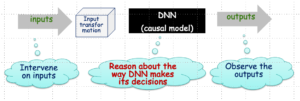

ReX is a causal explainability tool for image classifiers. ReX is black-box: it is agnostic to the internal structure of the classifier and assumes only that we can modify the inputs, send them to the classifier, and observe the output. ReX outperforms other tools on single explanations, non-contiguous explanations (for partially obscured images), and multiple explanations.
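The black-box setting above means an explainer only ever calls the classifier on modified copies of an image. The following minimal Python sketch (assuming NumPy) illustrates that query model. It is not ReX's own algorithm. The greedy block-removal strategy, the helper names `occlude` and `greedy_sufficient_mask`, the zero baseline used to hide pixels, and the toy classifier are all hypothetical choices made for illustration.

```python
import numpy as np


def occlude(image, mask, baseline=0):
    """Keep pixels where `mask` is True; replace all others with `baseline`."""
    out = np.full_like(image, baseline)
    out[mask] = image[mask]  # works for H x W and H x W x C images
    return out


def greedy_sufficient_mask(classify, image, block=2, baseline=0):
    """Hypothetical helper: shrink a pixel mask while the label stays the same.

    `classify` is treated as a black box: it is only called on modified images,
    never inspected.
    """
    h, w = image.shape[:2]
    target = classify(image)
    mask = np.ones((h, w), dtype=bool)
    for r in range(0, h, block):
        for c in range(0, w, block):
            trial = mask.copy()
            trial[r:r + block, c:c + block] = False  # try hiding this block
            if classify(occlude(image, trial, baseline)) == target:
                mask = trial  # block was not needed to keep the label
    return mask


# Usage with a toy classifier that only looks at the top-left 2x2 corner.
def toy_classifier(img):
    return int(img[:2, :2].sum() > 0)


image = np.ones((4, 4))
mask = greedy_sufficient_mask(toy_classifier, image)
print(mask.astype(int))
```

With this toy classifier, the sketch keeps only the top-left 2x2 block: hiding any other block leaves the label unchanged, so those blocks are dropped. Real tools need far more careful choices of baseline, partitioning, and search order.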

Variations of ReX

- ReX for explaining the output of image classifiers

- Multi-ReX for extracting multiple explanations

- Med-ReX for medical image classifiers

- SPEC-ReX for Raman spectroscopy

- T-ReX for tabular data

- 3D-ReX for 3D images

Other tools on our platform

- Explanations of absence

- IncX: real-time incremental explanations for videos

Assumptions

Presentations

Papers

- Multiple Different Explanations for Image Classifiers. Under review. This paper introduces Multi-ReX for multiple explanations.

- Explanations for Occluded Images. In ICCV’21. This paper introduces causality to the tool. Note: the tool is called DC-Causal in this paper.

- Explaining Image Classifiers using Statistical Fault Localization. In ECCV’20. The first paper on ReX. Note: the tool is called DeepCover in this paper.

Code

Contact me to get a working version.

Team

- Hana Chockler (head of group)

- David A. Kelly (postdoctoral researcher)

- Nathan Blake (postdoctoral researcher)

- Aditi Ramaswamy (PhD student)

- Fathima Mohamed (PhD student)

- Akchunya Chanchal (PhD student)

- Santiago Calderón Peña (former MSc student, currently remote collaborator)

- Melane Navaratnarajah (research MSci student)

- Stav Armoni-Friedmann (UG student)

- Aadit Devidas-Chavan (MSc student)

External collaborators

- Daniel Kroening, AWS

- Anita Grigoriadis, Cancer research, KCL

- Tom Booth, Cancer research, KCL

- Youcheng Sun, University of Manchester